Rylan Schaeffer

Towards an Improved Understanding and Utilization of Maximum Manifold Capacity Representations

Alt Title: Information Theory, Double Descent, Neural Scaling Laws and Multimodality in Maximum Manifold Capacity Representations

Authors: Rylan Schaeffer, Victor Lecomte, Dhruv Bhandarkar Pai, Andres Carranza, Berivan Isik, Alyssa Unell, Mikail Khona, Thomas Yerxa, Yann LeCun, SueYeon Chung, Andrey Gromov, Ravid Shwartz-Ziv, Sanmi Koyejo

Venue: arXiv 2024

Summary

🔥🚀Towards an Improved Understanding and Utilization of Maximum Manifold Capacity Representations🚀🔥

w/ @vclecomte @BerivanISIK @dhruv31415 @carranzamoreno9 @AlyssaUnell @KhonaMikail @tedyerxa

Sup: @sanmikoyejo @ziv_ravid @Andr3yGR @s_y_chung @ylecun

1/N

MMCR is a new high-performing self-supervised learning method, published at NeurIPS 2023 by @tedyerxa @s_y_chung @KuangYilun @EeroSimoncelli at @NYU_CNS & @FlatironCCN 🚀🚀🚀

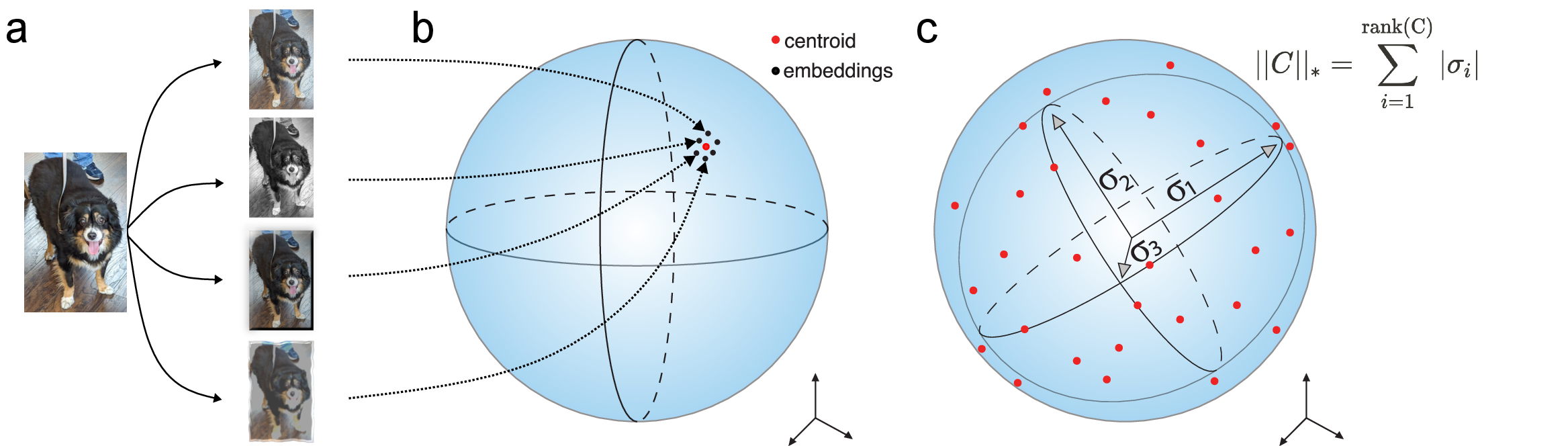

MMCR: Data -> K transforms per datum -> Embed -> Average over K transforms -> Minimize negative nuclear norm
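
The pipeline above can be sketched in a few lines of NumPy. This is only an illustrative sketch, not the authors' implementation: the function name `mmcr_loss` and the `(N, K, D)` array layout are hypothetical, and projecting each embedding onto the unit sphere before averaging is an assumption that the one-line summary does not spell out. The encoder and the K transforms are left out; the function starts from embeddings that have already been computed.

```python
import numpy as np


def mmcr_loss(embeddings: np.ndarray) -> float:
    """Negative nuclear norm of the per-datum mean embeddings.

    embeddings: array of shape (N, K, D) holding N data points,
    K transformed views per datum, and D embedding dimensions.
    (Hypothetical layout chosen for this sketch.)
    """
    # Assumption: normalize every embedding to unit length first.
    norms = np.linalg.norm(embeddings, axis=-1, keepdims=True)
    unit = embeddings / np.maximum(norms, 1e-12)

    # Average over the K transforms of each datum -> (N, D) centroid matrix.
    centroids = unit.mean(axis=1)

    # Nuclear norm = sum of singular values; the loss is its negative.
    singular_values = np.linalg.svd(centroids, compute_uv=False)
    return float(-singular_values.sum())


# Four data points whose views all agree and whose centroids are orthonormal.
spread = np.repeat(np.eye(4)[:, None, :], 3, axis=1)   # shape (4, 3, 4)

# Four data points that all collapse onto the same direction.
collapsed = np.zeros((4, 3, 4))
collapsed[..., 0] = 1.0

print(mmcr_loss(spread), mmcr_loss(collapsed))
```

In this toy case the spread-out embeddings reach a loss of -4 (four unit singular values), while the collapsed embeddings only reach -2 (a single singular value of sqrt(4)), so minimizing the loss favors views that agree within a datum and centroids that spread out across data.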

2/N

MMCR originates from the statistical mechanical characterization of the linear separability of manifolds, building off foundational work by @s_y_chung @UriCohen42 @HSompolinsky

But most SSL algorithms originate in information theory - can we understand MMCR from this perspective?

3/N